Our Mission

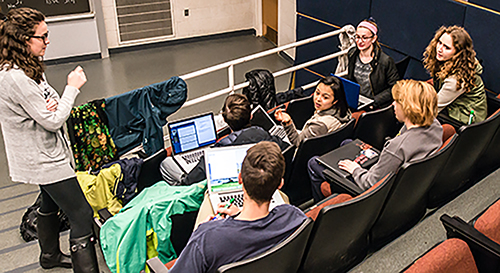

The mission of the Center for Research on Learning and Teaching (CRLT) is to promote excellence and innovation in teaching in all 19 schools and colleges at the University of Michigan.

CRLT is dedicated to supporting and advancing evidence-based learning and teaching practices and to the professional development of all members of the campus teaching community. CRLT partners with faculty, graduate students, postdocs, and administrators to develop and sustain a university culture that values and rewards teaching, respects and supports individual differences among learners, and creates learning environments in which diverse students and instructors can excel.